LangChain is a framework that provides AI developers with tools, components, and abstractions for building applications that use large language models (LLMs).

Gloo AI Gateway is compatible with GenAI applications built with LangChain, and combining the two creates a more robust architecture that can enhance the development and deployment of GenAI applications in your organization.

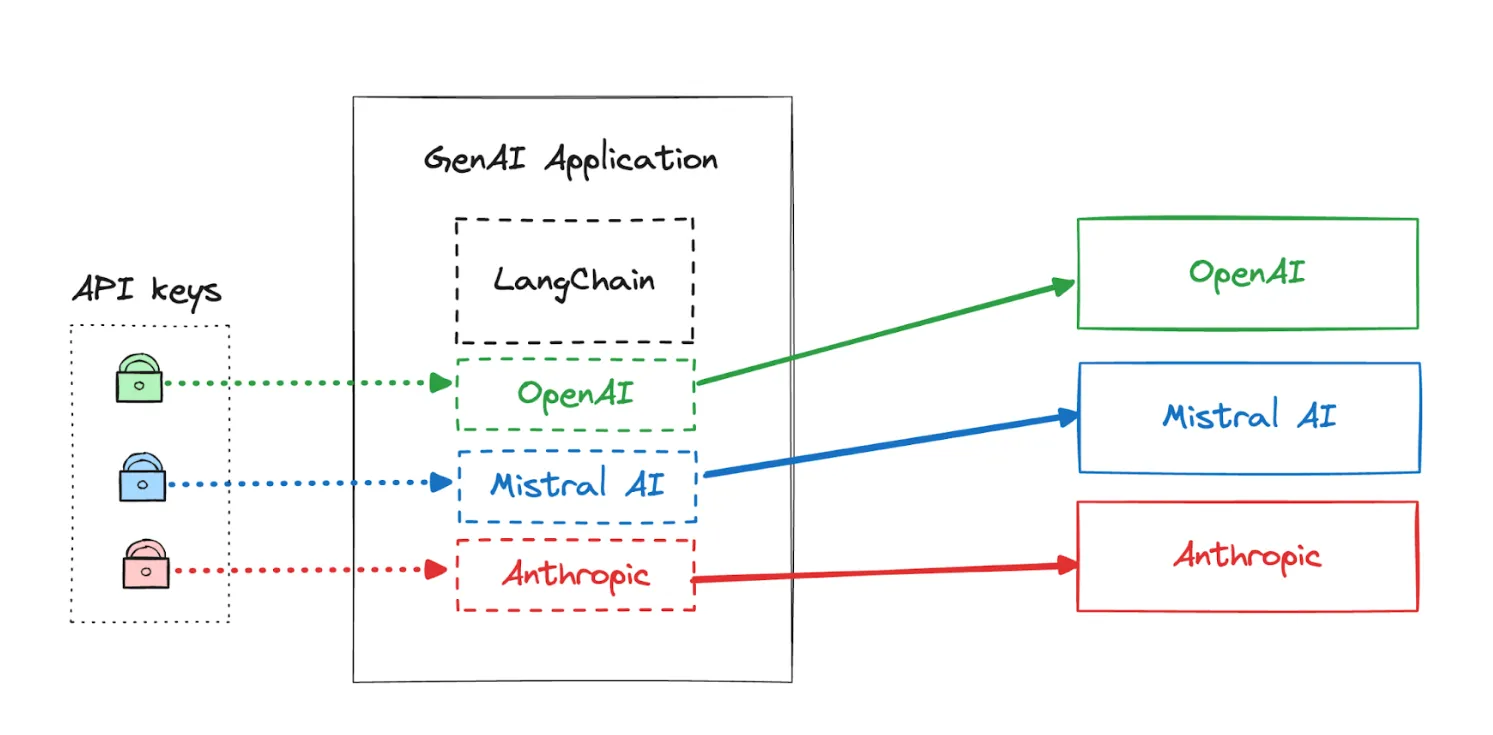

The “Fat Client” Approach

When using LangChain, the framework directly accesses the LLM providers you might be using. Moreover, you must be very explicit in deciding which LLM provider you want to use, as there are separate packages to install and classes you must instantiate. As the framework is part of your applications, any framework or module version updates will require a rebuild and redeployment of the applications.

For example, suppose you want to use OpenAI. You’d install the langchain-openai package, make sure the API key is securely stored where your application can access it, and then use the ChatOpenAI class to create a client instance.
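The original code listing is not reproduced here, so the following is a minimal sketch of this direct approach, assuming the langchain-openai package and an OPENAI_API_KEY environment variable. The model name gpt-4o-mini is an assumption for illustration. The LangChain import sits inside a function so the settings logic can be checked without the package installed.

```python
import os


def direct_openai_settings(model: str = "gpt-4o-mini") -> dict:
    """Settings a "fat client" needs when it talks to OpenAI directly.

    The application itself must hold the provider API key.
    """
    api_key = os.environ.get("OPENAI_API_KEY")
    if not api_key:
        raise RuntimeError("OPENAI_API_KEY must be set in the application's environment")
    return {"model": model, "api_key": api_key}


def make_direct_client():
    """Build a ChatOpenAI client that calls OpenAI directly."""
    # Requires `pip install langchain-openai`; imported lazily so the
    # settings above can be inspected without the package installed.
    from langchain_openai import ChatOpenAI

    return ChatOpenAI(**direct_openai_settings())
```

Calling make_direct_client().invoke("Tell me a joke") would then send the prompt straight to OpenAI, with the key living inside the application.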

You’d have to do the same for Mistral AI, Anthropic, or any other provider: install more packages (such as langchain-mistralai and langchain-anthropic), obtain, securely store, and use more API keys, and instantiate chat or other classes from those packages in your application.
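To make the sprawl concrete, here is a small sketch of the bookkeeping each application ends up carrying. The package, class, and environment variable names are the LangChain integrations as we know them; the PROVIDERS table and both helper functions are hypothetical, written only for illustration.

```python
import os

# Each provider brings its own package, client class, and API key variable.
PROVIDERS = {
    "openai": ("langchain-openai", "langchain_openai.ChatOpenAI", "OPENAI_API_KEY"),
    "anthropic": ("langchain-anthropic", "langchain_anthropic.ChatAnthropic", "ANTHROPIC_API_KEY"),
    "mistral": ("langchain-mistralai", "langchain_mistralai.ChatMistralAI", "MISTRAL_API_KEY"),
}


def missing_provider_keys(providers: list[str]) -> list[str]:
    """Return the API key variables this application still has to provision."""
    return [PROVIDERS[p][2] for p in providers if not os.environ.get(PROVIDERS[p][2])]


def required_packages(providers: list[str]) -> list[str]:
    """Return the pip packages this application must pin and keep upgrading."""
    return sorted({PROVIDERS[p][0] for p in providers})
```

Multiply this by every application in the organization, and every key rotation or package upgrade becomes a coordinated rebuild.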

If your organization runs multiple applications, and you factor in frequent module and application upgrades, managing all of this can quickly become complex and error-prone.

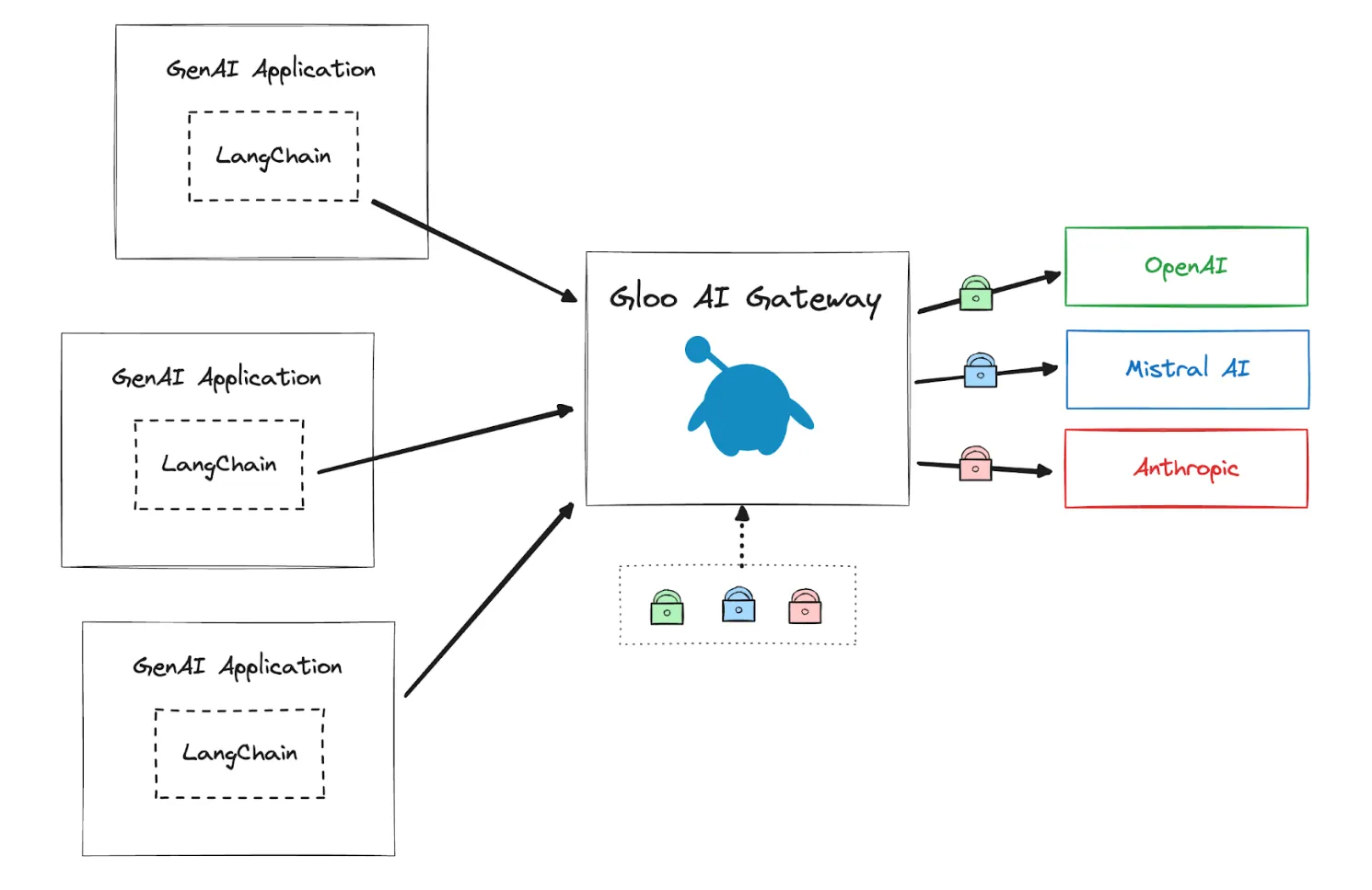

A Different AI Gateway Approach

One of the ideas behind Gloo AI Gateway is to give GenAI applications a unified way to access this functionality, and to move concerns such as framework upgrades, rebuilds caused by changing dependencies, API key management, access control, and rate limiting away from applications and their developers. Instead, these concerns are offloaded to the gateway, where the operations team handles and manages them.

This architecture brings additional benefits. The Gloo AI Gateway’s central position allows us to implement features such as additional security and access control, observability, rate limiting and cost control, semantic caching, and others uniformly and across all your applications.

LangChain and Gloo AI Gateway

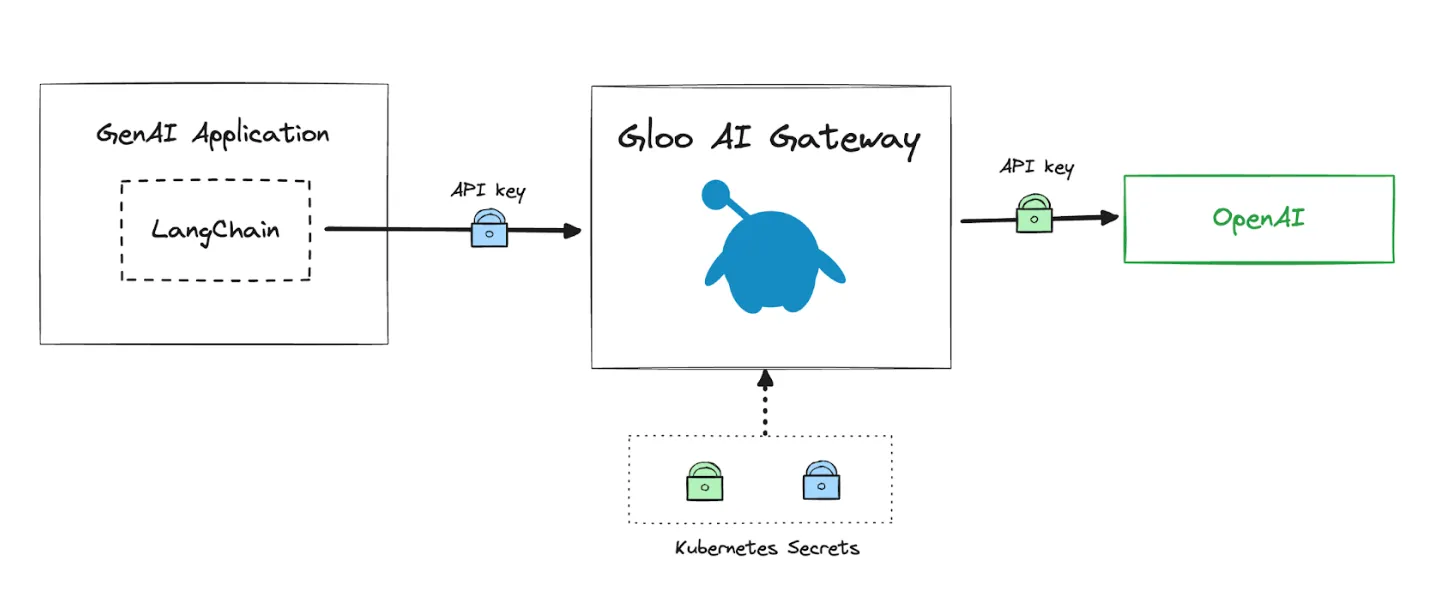

Integrating your LangChain applications with Gloo AI Gateway is as simple as pointing the provider-specific classes at the Gloo AI Gateway when you instantiate them. You can keep using your existing code and LangChain features!

Let’s look at a couple of examples. We have already deployed Gloo AI Gateway, so the first step is to create a Kubernetes Secret that stores the LLM provider API key.
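The original manifest is not reproduced here. As a minimal sketch, the Secret can be expressed as a Python dict; printing it with json.dumps gives JSON that kubectl apply -f - accepts just like YAML. The secret name, the gloo-system namespace, and the "Authorization: Bearer ..." entry format are assumptions based on our reading of the Gloo AI Gateway docs; confirm the expected format for your version.

```python
def provider_secret(name: str, namespace: str, api_key: str) -> dict:
    """Kubernetes Secret holding the LLM provider key for the gateway."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        # stringData lets Kubernetes handle the base64 encoding.
        # Assumption: the gateway expects an "Authorization" entry with a bearer token.
        "stringData": {"Authorization": f"Bearer {api_key}"},
    }
```

For example, json.dumps(provider_secret("openai-secret", "gloo-system", os.environ["OPENAI_API_KEY"]), indent=2) produces a manifest you can pipe to kubectl. Only the cluster, not the application, now holds the provider key.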

To register an LLM provider with the Gloo AI Gateway, we’ll use the Upstream resource and reference the Kubernetes Secret. Gloo AI Gateway will use the API key from the secret when accessing the LLM provider.
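A minimal sketch of such an Upstream, again as a Python dict ready for json.dumps and kubectl apply -f -. The field path spec.ai.openai.authToken.secretRef follows the Gloo AI Gateway docs as we understand them, and the resource names are hypothetical; verify the fields against the CRDs installed in your cluster.

```python
def openai_upstream(name: str, namespace: str, secret_name: str) -> dict:
    """Gloo Upstream that registers OpenAI as an LLM backend (field names assumed)."""
    return {
        "apiVersion": "gloo.solo.io/v1",
        "kind": "Upstream",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "ai": {
                "openai": {
                    # The gateway reads the provider key from this Secret
                    # and attaches it to requests it forwards to OpenAI.
                    "authToken": {"secretRef": {"name": secret_name, "namespace": namespace}}
                }
            }
        },
    }
```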

Lastly, we use the HTTPRoute resource from the Kubernetes Gateway API to define how traffic is routed to the Upstream.

This route matches requests sent to the /v1 path prefix and routes the traffic to the OpenAI Upstream resource.
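The route described above can be sketched as a Python dict as well. The parentRefs, matches, and backendRefs fields are standard Gateway API; the Gateway name ai-gateway and the namespace are hypothetical, and referencing a Gloo Upstream through group gloo.solo.io and kind Upstream reflects our understanding of Gloo’s Gateway API integration.

```python
def openai_route(namespace: str, gateway_name: str, upstream_name: str) -> dict:
    """HTTPRoute sending every request under /v1 to the OpenAI Upstream."""
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": upstream_name, "namespace": namespace},
        "spec": {
            # Attach the route to the Gateway that fronts AI traffic.
            "parentRefs": [{"name": gateway_name, "namespace": namespace}],
            "rules": [
                {
                    "matches": [{"path": {"type": "PathPrefix", "value": "/v1"}}],
                    # Gloo Upstreams are referenced by API group and kind.
                    "backendRefs": [
                        {"name": upstream_name, "namespace": namespace,
                         "group": "gloo.solo.io", "kind": "Upstream"}
                    ],
                }
            ],
        },
    }
```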

The flexibility of the Kubernetes Gateway API lets you configure listeners, paths, and routing to one or more backend upstreams in a way that suits your use case. For example, if you have multiple LLM backends, you could create separate HTTPRoutes (or separate rules within one HTTPRoute) that route traffic based on matching paths, headers, or query parameters. You can also use HTTPRoute filters to modify request and response headers, mirror requests, or rewrite URLs.
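As an illustration of header-based routing, here is a hypothetical HTTPRoute sketch. It assumes Upstreams named mistral and openai already exist and that clients pick a provider with an x-llm-provider header; both names, and the x-routed-by header, are made up for this example. Under the Gateway API precedence rules, a match with more header conditions wins over one with fewer for the same path, so the Mistral rule takes priority.

```python
def multi_llm_route(namespace: str, gateway_name: str) -> dict:
    """HTTPRoute that chooses an LLM backend by request header (hypothetical names)."""

    def backend(name: str) -> dict:
        return {"name": name, "namespace": namespace, "group": "gloo.solo.io", "kind": "Upstream"}

    v1 = {"type": "PathPrefix", "value": "/v1"}
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": "llm-providers", "namespace": namespace},
        "spec": {
            "parentRefs": [{"name": gateway_name, "namespace": namespace}],
            "rules": [
                {
                    # Requests that explicitly ask for Mistral.
                    "matches": [{"path": v1, "headers": [{"name": "x-llm-provider", "value": "mistral"}]}],
                    "backendRefs": [backend("mistral")],
                },
                {
                    # Everything else under /v1 goes to OpenAI; a filter adds a request header.
                    "matches": [{"path": v1}],
                    "filters": [{
                        "type": "RequestHeaderModifier",
                        "requestHeaderModifier": {"add": [{"name": "x-routed-by", "value": "ai-gateway"}]},
                    }],
                    "backendRefs": [backend("openai")],
                },
            ],
        },
    }
```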

With the combination of the Kubernetes Secret, Upstream, and HTTPRoute resources, you already have a functioning API gateway for AI scenarios!

Let’s see how to modify the earlier code to use LangChain with Gloo AI Gateway.

When instantiating the ChatOpenAI class, we set the base URL to point to Gloo AI Gateway and provide a value for the api_key field. The api_key must be specified here because LangChain uses Pydantic to validate its settings and requires a value for the API key; the gateway itself uses the real provider key from the Kubernetes Secret.
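A minimal sketch of the modified client, assuming the gateway is reachable at a hypothetical http://localhost:8080 (for example, through a port-forward) and reusing the assumed gpt-4o-mini model name. ChatOpenAI appends paths such as /chat/completions to base_url, so ending the base URL in /v1 lines it up with the route’s /v1 prefix.

```python
def gateway_client_settings(gateway_url: str, model: str = "gpt-4o-mini") -> dict:
    """Settings for a ChatOpenAI client that talks to the gateway instead of OpenAI."""
    return {
        "model": model,
        # Requests land on <gateway>/v1/..., matching the HTTPRoute prefix.
        "base_url": gateway_url.rstrip("/") + "/v1",
        # Placeholder only: LangChain requires some value, while the gateway
        # uses the real provider key from the Upstream's Secret.
        "api_key": "placeholder",
    }


def make_gateway_client(gateway_url: str):
    # Requires `pip install langchain-openai`; imported lazily.
    from langchain_openai import ChatOpenAI

    return ChatOpenAI(**gateway_client_settings(gateway_url))
```

The rest of the application, such as make_gateway_client("http://localhost:8080").invoke("Hello"), stays unchanged.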

While this is enough to get Gloo AI Gateway working with LangChain, it leaves the gateway open to anyone who can reach it. Gloo AI Gateway supports multiple ways to authenticate incoming requests: basic authentication, API keys, LDAP, OAuth, OPA, and even a passthrough option that lets you use your own authentication service.

Let’s configure simple API key authentication for Gloo AI Gateway. We’ll start by creating a Kubernetes Secret called ai-gw-openai-apikey, which holds the API key protecting the OpenAI route on the gateway.
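A minimal sketch of that Secret as a Python dict. The secret type extauth.solo.io/apikey and the api-key data entry follow the Gloo external auth docs as we recall them, so treat them as assumptions to verify; the team: infrastructure label is hypothetical and exists only so an AuthConfig can select the Secret.

```python
def gateway_apikey_secret(name: str, namespace: str, api_key: str, team: str) -> dict:
    """Secret holding a key that clients must present to the gateway (format assumed)."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        # The label is what the AuthConfig's selector matches on.
        "metadata": {"name": name, "namespace": namespace, "labels": {"team": team}},
        "type": "extauth.solo.io/apikey",
        "stringData": {"api-key": api_key},
    }
```

A random key from Python’s secrets.token_urlsafe(32) is a reasonable choice for the api_key argument.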

Next, we create an AuthConfig resource that describes the type of authentication we want, including the name of the header Gloo AI Gateway checks for the API key and a label selector that matches the secret we created.
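A sketch of the AuthConfig as a Python dict. The apiVersion, the apiKeyAuth block, and its headerName and labelSelector fields follow Gloo’s external auth API as we understand it; the resource name apikey-auth, the api-key header, and the team label are hypothetical choices.

```python
def apikey_authconfig(name: str, namespace: str, header_name: str, team: str) -> dict:
    """AuthConfig enabling API key auth (field names assumed from the Gloo docs)."""
    return {
        "apiVersion": "enterprise.gloo.solo.io/v1",
        "kind": "AuthConfig",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "configs": [
                {
                    "apiKeyAuth": {
                        # Header the gateway reads the client's key from.
                        "headerName": header_name,
                        # Any Secret carrying this label counts as a valid key.
                        "labelSelector": {"team": team},
                    }
                }
            ]
        },
    }
```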

Finally, we create a RouteOption resource that references the AuthConfig and add the RouteOption as a filter in the HTTPRoute.
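A sketch of both steps in Python. The RouteOption fields (spec.options.extauth.configRef) reflect our understanding of the Gloo Gateway API and should be checked against your version; the ExtensionRef filter type is standard Gateway API. The helper with_route_option is hypothetical: it copies an existing HTTPRoute dict and attaches the filter to every rule.

```python
import copy


def extauth_route_option(name: str, namespace: str, authconfig_name: str) -> dict:
    """RouteOption that applies an AuthConfig to the routes referencing it."""
    return {
        "apiVersion": "gateway.solo.io/v1",
        "kind": "RouteOption",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"options": {"extauth": {"configRef": {"name": authconfig_name, "namespace": namespace}}}},
    }


def with_route_option(route: dict, option_name: str) -> dict:
    """Return a copy of an HTTPRoute whose rules all apply the RouteOption."""
    patched = copy.deepcopy(route)  # leave the caller's route untouched
    for rule in patched["spec"]["rules"]:
        rule.setdefault("filters", []).append({
            "type": "ExtensionRef",
            "extensionRef": {"group": "gateway.solo.io", "kind": "RouteOption", "name": option_name},
        })
    return patched
```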

With this configuration applied, the earlier code now fails with an authentication error, so we must provide the correct API key, for example, by reading it from an environment variable.
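A minimal sketch of the authenticated client. It assumes the AuthConfig checks a header named api-key and that the key is exported as GLOO_AI_GW_API_KEY; both names are hypothetical. ChatOpenAI’s default_headers parameter sends the extra header on every request, while api_key keeps its placeholder role.

```python
import os

# Hypothetical: must match the headerName configured in the AuthConfig.
API_KEY_HEADER = "api-key"


def authenticated_gateway_settings(gateway_url: str, model: str = "gpt-4o-mini") -> dict:
    """ChatOpenAI settings that present the gateway API key on every request."""
    key = os.environ.get("GLOO_AI_GW_API_KEY")
    if not key:
        raise RuntimeError("set GLOO_AI_GW_API_KEY to the key stored in ai-gw-openai-apikey")
    return {
        "model": model,
        "base_url": gateway_url.rstrip("/") + "/v1",
        "api_key": "placeholder",  # still required by LangChain's validation
        "default_headers": {API_KEY_HEADER: key},  # checked by the gateway's API key auth
    }


def make_authenticated_client(gateway_url: str):
    # Requires `pip install langchain-openai`; imported lazily.
    from langchain_openai import ChatOpenAI

    return ChatOpenAI(**authenticated_gateway_settings(gateway_url))
```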

Closing Thoughts on LangChain and Gloo AI Gateway

Integrating LangChain with Gloo AI Gateway provides a flexible and secure architecture for developing and deploying AI applications. This approach centralizes key management, access control, and other operational concerns, allowing developers to focus on building innovative applications. There are no additional modules or libraries to install, and you can keep using your existing LangChain implementation.

To learn more about Gloo AI Gateway, take a look at the demo videos and try one of the interactive labs.
