My first real exposure to GitOps was while working on a project for an Amazon subsidiary where we used AWS technologies like CloudFormation. That was nice, but I yearned for something more general that wouldn’t lock me in to a single cloud provider.

My real GitOps “conversion” happened some time later when I was working as an architect on a 10-week digital modernization project for a U.S. public-sector client. We had an ambitious goal and were feeling a bit of schedule pressure. One Monday morning we arrived at the client’s workspace only to discover that our entire Kubernetes development environment was mysteriously wiped out. The temptation was to try to understand what happened and fix it. But our project SREs had constructed everything using a still-new GitOps platform called Argo Continuous Delivery (Argo CD). Within minutes they had reconstructed our environment from the “spec” stored in our git repo. We avoided a very costly delay, and at least one new GitOps fanboy was born.

Fast-forward a few years, and now as a Field Engineer for solo.io, many clients ask us about best practices for using GitOps with Gloo products. It’s one of my favorite questions to hear, because it gives us an opportunity to discuss the strengths of our products’ cloud-native architecture. For example, the fact that Gloo configuration artifacts are expressed as YAML in Kubernetes Custom Resource Definitions (CRDs) means that they can be stored as artifacts in a git repo. That means they fit hand-in-glove with GitOps platforms like Flux and Argo. It also means that they can be stored at runtime in native Kubernetes etcd storage. That means there are no external databases to configure with the Gloo API Gateway.

Are you thinking of adopting an API gateway like Gloo Gateway? Would you like to understand how that fits with popular GitOps platforms like Argo? Then this post is for you.

Give us a few minutes, and we’ll give you a Kubernetes-hosted application accessible via an Envoy-based gateway configured with policies for routing, service discovery, timeouts, debugging, access logging, and observability. And we’ll manage the configuration entirely in a GitHub repo using the Argo GitOps platform.

Not interested in using Argo with Gloo Gateway today? Not a problem. Check out one of the other installments in this hands-on tutorial series that will get you up and running without the Argo dependency:

- Install-Free Experience on Instruqt

- Local KinD Cluster

If you have questions, please reach out on the Solo Slack channel.

Ready? Set? Go!

Prerequisites

For this exercise, we’re going to do all the work on your local workstation. All you’ll need to get started is a Docker-compatible environment such as Docker Desktop, some CLI utilities like kubectl, kind, git, and curl, plus the Argo utility argocd. Make sure these are all available to you before jumping into the next section. I’m building this on macOS, but other platforms should be perfectly fine as well. If you’d prefer using a Kubernetes distribution other than kind, this tutorial should still work with minimal or no changes.

INSTALL Platform Components

Let’s start by installing the platform and application components we need for this exercise.

Install KinD

To create a local Kubernetes cluster in your Docker container, simply run the following:

After that completes, verify that the cluster has been created:

The output should look similar to below:

Install Gateway API CRDs

The Kubernetes Gateway API is an important new standard that represents the next generation of Kube ingress. Its abstractions are expressed using standard custom resource definitions (CRDs). This is a great development because it helps to ensure that all implementations that support the standard will maintain compliance, and it also facilitates declarative configuration of the Gateway API. Note that these CRDs are not installed by default, ensuring that they are only available when users explicitly activate them.

Let’s install those CRDs on our cluster now.

Expect to see this response:

Install Argo CD Platform

Let’s start by installing Argo CD on our Kubernetes cluster.

We’ll change the username / password combination from the default to admin / solo.io.

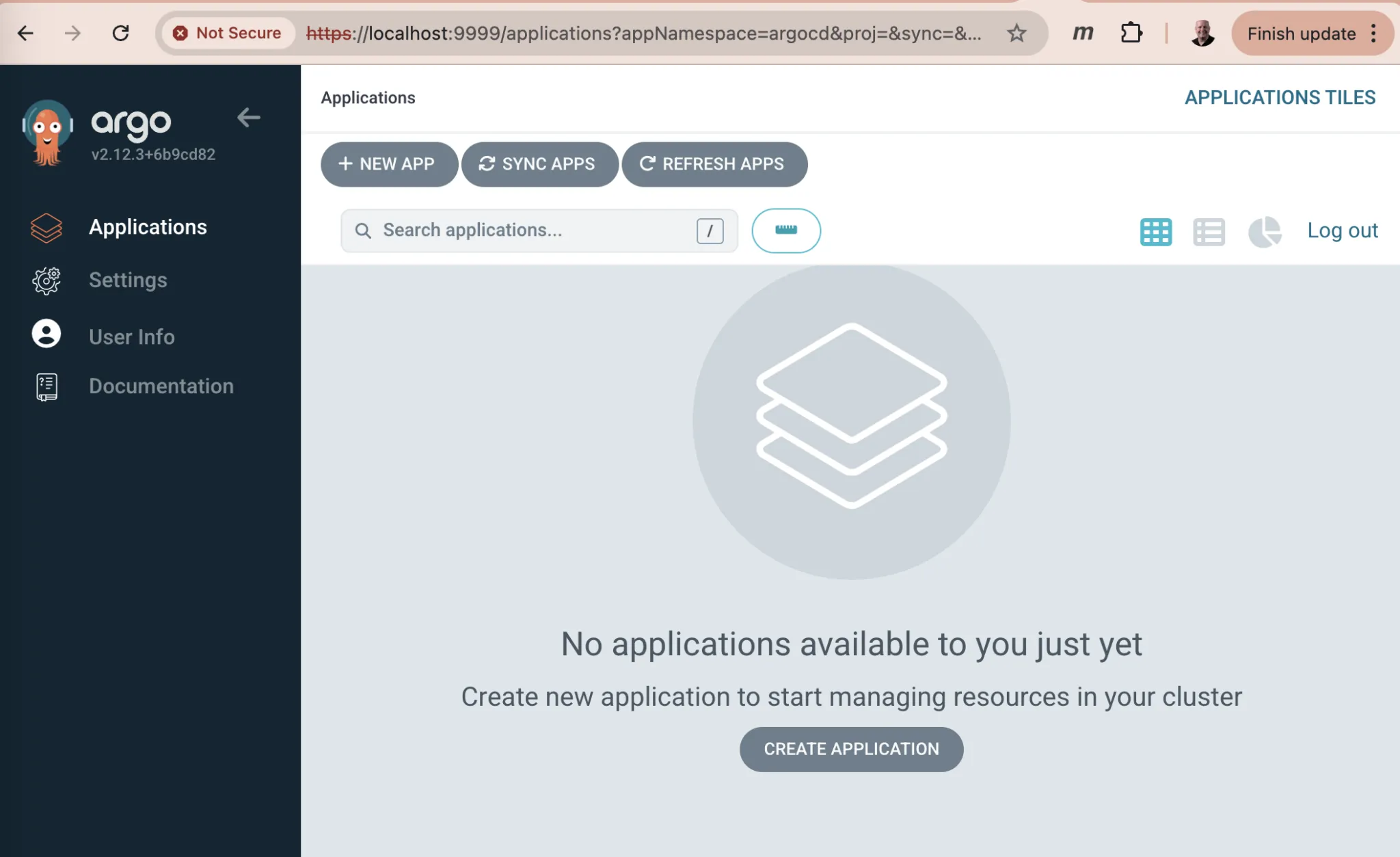

We can confirm successful installation by accessing the Argo CD UI using a port-forward to http://localhost:9999:

Installation Troubleshooting

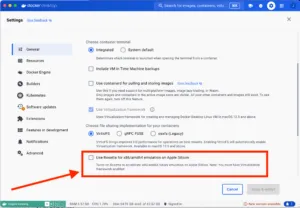

If you encounter errors installing Gloo Gateway on your workstation, like a message indicating that a deployment is not progressing, then your local Docker installation may be under-resourced. For reference, I’m running through this exercise on an M2 Mac with 64 GB of memory. My Docker Desktop reports that my container running all these components is consuming on average one-third of the 7.5 GB of memory and 20% of the 12 CPU cores available to it.

If you’re running this exercise on an M1/M2/M3 Mac, and are hosting the kind cluster in Docker Desktop, then you may encounter installation failures due to this Docker issue. The easiest workaround is to disable Rosetta emulation in the Docker Desktop settings. (Rosetta is enabled by default.) Then installation should proceed with no problem.

Clone Configuration Template Repo

The magic of Argo and similar GitOps platforms is that users declare the configuration they want in a git repository. The Argo controller then uses that stored configuration to maintain the state of the live Kubernetes deployments. Say good-bye to kubectl patch on production systems.

In order to establish our configuration repo, let’s clone a template stored in GitHub to a fresh repo under your GitHub account. Once you’ve created an empty repo, then use the script below to populate it. Be sure to use your repo’s URL in the git remote command below.

You’ll use this cloned repository on your GitHub account to manage the configuration that will control the state of the gateway and services deployed in your live Kubernetes cluster.

Install Glooctl Utility

glooctl is a command-line utility that allows users to view, manage, and debug Gloo Gateway deployments, much like a Kubernetes user employs the kubectl utility. Let’s install glooctl on our local workstation:

We’ll test out the installation using the glooctl version command. It responds with the version of the CLI client that you have installed. However, the server version is undefined since we have not yet installed Gloo Gateway. Enter:

Which responds:

Install Gloo Gateway

The Gloo Gateway documentation describes how to install the open-source version on your Kubernetes cluster using helm. In our case, in keeping with our GitOps theme, we have configured an Argo Application Custom Resource that we’ll use to declare how we want our Gateway configured. Under the covers, the Argo controller will use helm to carry out the installation, but all we’ll need to manage is this Application resource.
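As a rough sketch of what such an Application resource can look like, the fragment below asks Argo CD to install a Helm chart into the gloo-system namespace. The Application name, the argocd namespace, the chart repository URL, the chart name, the Helm values, and the automated sync policy are all assumptions made for illustration, and the chart version is left as a placeholder. The file in your cloned repository is the authoritative version.

```yaml
# Hypothetical Argo CD Application that installs Gloo Gateway via Helm.
# Names, URLs, and values here are illustrative assumptions.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: gloo-gateway-oss-helm          # hypothetical name
  namespace: argocd                    # assumes Argo CD runs in "argocd"
spec:
  project: default
  destination:
    server: https://kubernetes.default.svc   # the local (in-cluster) API server
    namespace: gloo-system
  source:
    repoURL: https://storage.googleapis.com/solo-public-helm   # assumed chart repo
    chart: gloo                                                 # assumed chart name
    targetRevision: "<chart-version>"   # placeholder: pin the version you intend to run
    helm:
      values: |
        kubeGateway:
          enabled: true                 # assumed flag enabling Gateway API support
  syncPolicy:
    automated:                          # assumed: Argo applies changes without a manual SYNC
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true            # create gloo-system if it does not exist
```

With an automated sync policy like this, Argo installs the chart as soon as the Application is created, which would be consistent with the gateway appearing after a minute or so without any manual sync.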

Ensure that you’re in the top-level directory of the cloned repository, and then use kubectl to apply this configuration to your cluster:

After a minute or so, your Gateway instance should be deployed. Confirm by checking on the status of the Gloo control plane.

You should soon see a response like this:

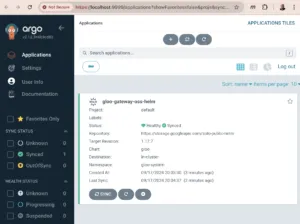

You can also revisit the Argo CD UI and confirm that there is now a single Application panel in a green Synced status.

That’s all that’s required to install Gloo Gateway. Notice that we did not install or configure any kind of external database to manage Gloo artifacts. That’s because the product was architected to be Kubernetes-native. All artifacts are expressed as Kubernetes Custom Resources, and they are all stored in native etcd storage. Consequently, Gloo Gateway leads to more resilient and less complex systems than alternatives that are either shoe-horned into Kubernetes or require external moving parts.

Note that everything we do in this getting-started exercise runs on the open-source version of Gloo Gateway. There is also an enterprise edition of Gloo Gateway that adds features to support advanced authentication and authorization, rate limiting, and observability, to name a few. You can see a feature comparison here. If you’d like to work through this blog post using Gloo Gateway Enterprise instead, then request a free trial here.

Install httpbin Application

httpbin is a great little REST service that can be used to test a variety of HTTP operations and echo the response elements back to the consumer. We’ll use it throughout this exercise. We’ll install the httpbin service on our Kubernetes cluster using another Argo Application. Customize the repoURL in the template below to point to your GitHub account, so that it will be used by Argo as the source of truth for your configuration. The path parameter below the repoURL indicates where the configuration for this particular Application lies within the configured repo. We’ll be adding and modifying configuration files at this location to manage the routing rules for this Application.
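A minimal sketch of such an Application follows. The name httpbin-app matches the one shown in the Argo UI later in this post, but the argocd and httpbin namespaces are assumptions, and the repoURL and path values are placeholders that you must replace with your own repository and config directory.

```yaml
# Hypothetical Argo CD Application for httpbin, sourced from your own repo.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: httpbin-app
  namespace: argocd                    # assumes Argo CD runs in "argocd"
spec:
  project: default
  source:
    # Placeholder: the repo you created under your GitHub account.
    repoURL: https://github.com/<your-github-account>/<your-repo>.git
    # Placeholder: the directory in that repo holding the httpbin config.
    path: <path-to-httpbin-config>
    targetRevision: HEAD
  destination:
    server: https://kubernetes.default.svc
    namespace: httpbin                 # assumed target namespace
  # Empty sync policy: Argo detects changes in the repo but waits for a manual SYNC.
  syncPolicy: {}
```

Leaving syncPolicy empty is what makes every change in this tutorial wait for an explicit sync from the UI or the argocd CLI.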

After customizing the Application YAML above, apply that using kubectl to create the deployment in your cluster.

You should see:

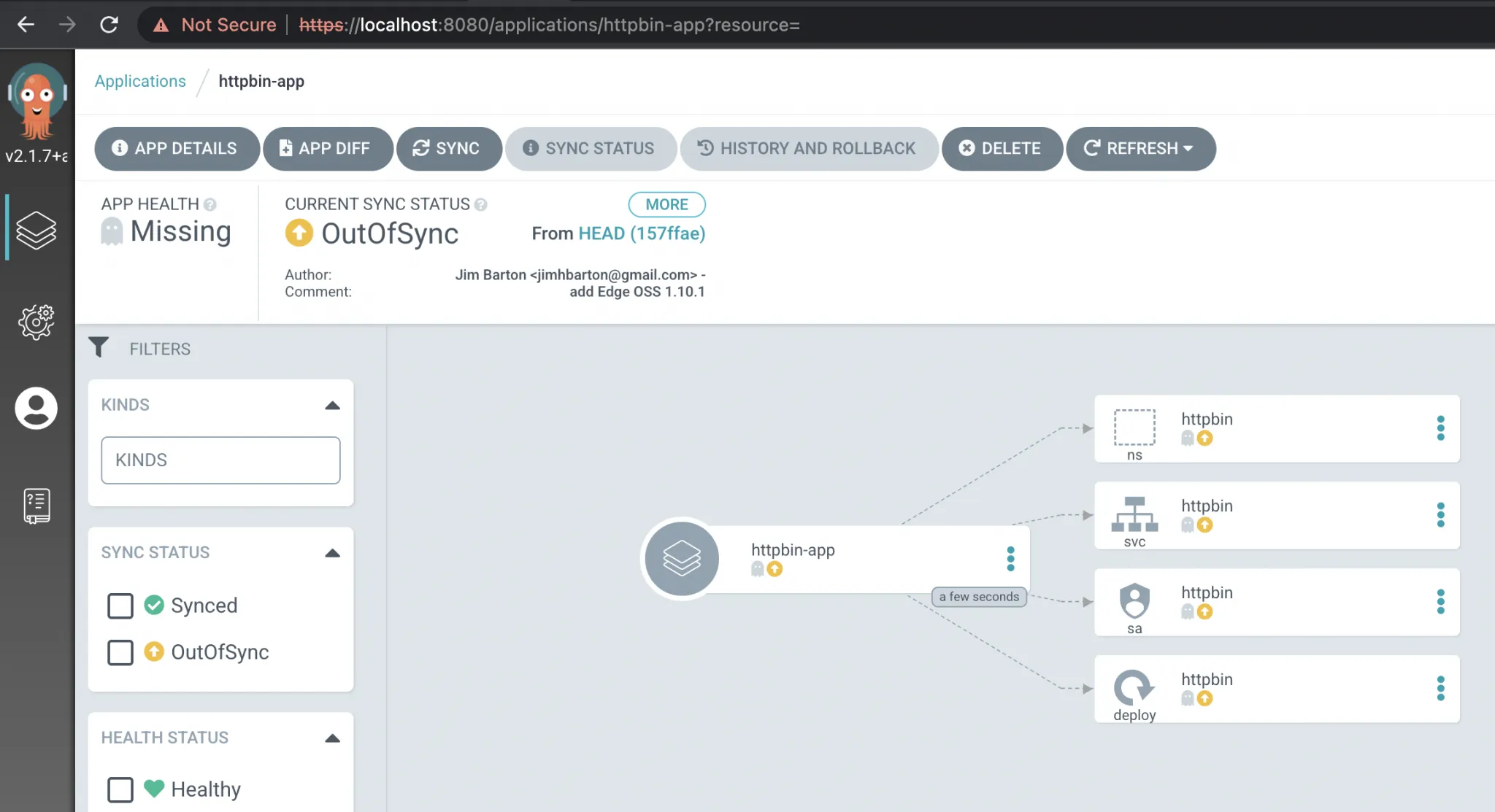

Revisit the Argo console and there should be two applications visible, one for Gloo Gateway itself and another for the httpbin application. The UI below shows the initial detailed view of httpbin-app.

You may notice that all the elements of the app are listed as being OutOfSync. That goes back to our decision to use an empty syncPolicy for the httpbin Argo Application. This means we’ll need to manually initiate synchronization between the repo and our Kubernetes environment. You may not want to use this setting in a production environment, as you generally want the Argo controller to maintain the application state as close as possible to the state of the application repo. But in this case, we maintain it manually so we can more clearly see how the state of the application changes over time.

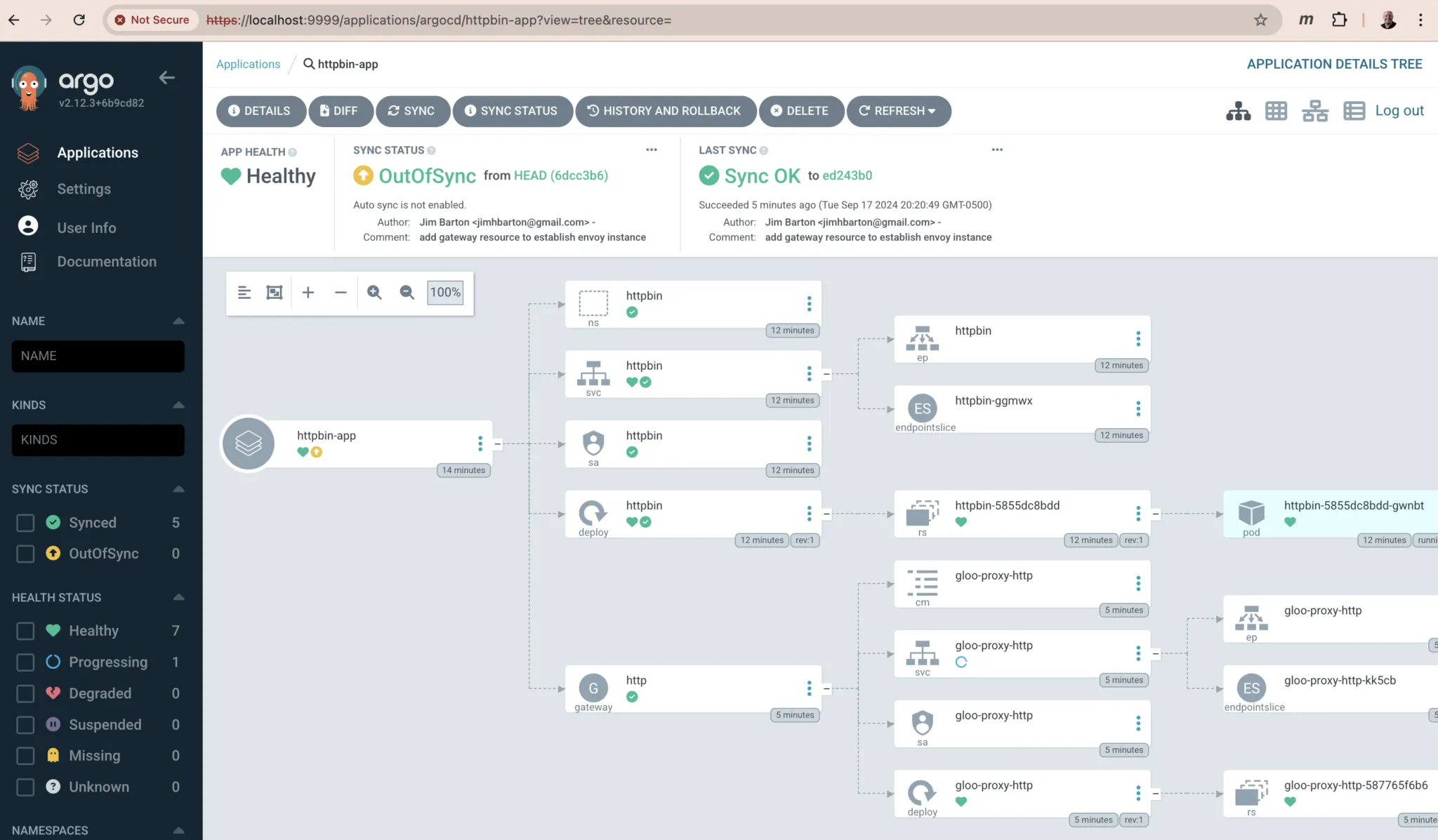

If you press the SYNC button in the UI, then the Argo controller will install httpbin in your cluster, spin up a pod, and all your indicators should turn green, something like this:

You can also confirm that the httpbin pod is running by searching for pods with an app label of httpbin in the application’s namespace:

And you will see something like this:

CONTROL Routing Policies with Argo CD

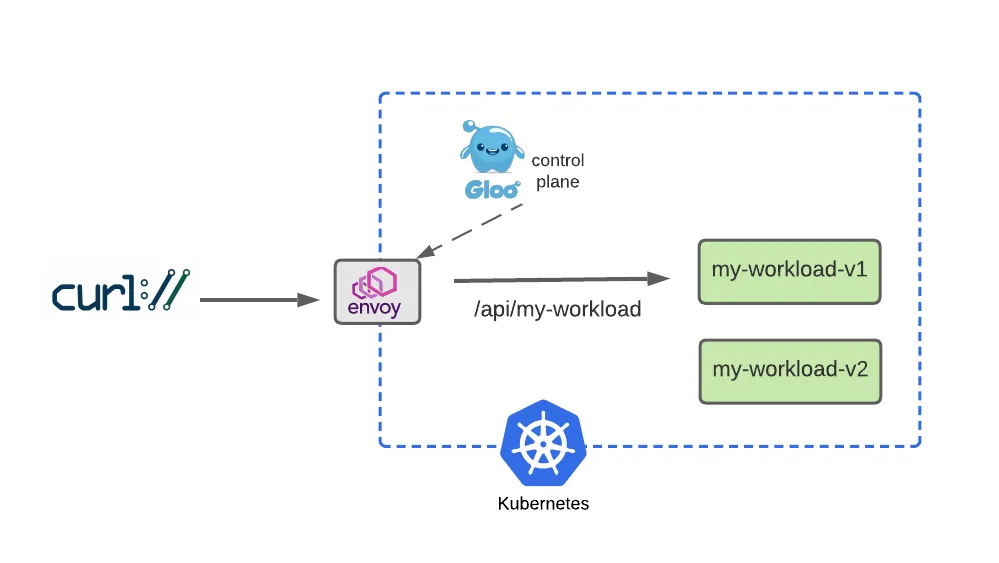

At this point, you should have a Kubernetes cluster and the Gateway APIs configured, along with our sample httpbin service, the glooctl CLI, and the core Gloo Gateway services. These services include both an Envoy data plane and the Gloo control plane. Now we’ll configure a Gateway listener, establish external access to Gloo Gateway, and test the routing rules that are the core of the proxy configuration.

Configure a Gateway Listener

Let’s begin by establishing a Gateway resource that sets up an HTTP listener on port 8080 to expose routes from all our namespaces. Gateway custom resources like this are part of the Gateway API standard.
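A Gateway matching that description can be sketched as follows. The name http and the namespace gloo-system match the gloo-system/http reference used by the routes later in this post. The gatewayClassName value is an assumption, so check which GatewayClass your Gloo Gateway installation registers.

```yaml
# Sketch of a Gateway with a single HTTP listener on port 8080.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: http
  namespace: gloo-system
spec:
  gatewayClassName: gloo-gateway       # assumed GatewayClass name
  listeners:
    - name: http
      protocol: HTTP
      port: 8080
      allowedRoutes:
        namespaces:
          from: All                    # accept routes attached from any namespace
```

Setting allowedRoutes.namespaces.from to All is what lets HTTPRoutes in other namespaces attach to this listener. The Gateway API default is Same, which would restrict attachment to routes in gloo-system.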

We’ll add this to our kind cluster by first copying it from our library directory to the active config directory within our Argo repo. Then we’ll commit the change to GitHub.

Because of our empty syncPolicy on this Argo application, this change will not be automatically deployed to our Kubernetes cluster. So we’ll activate the Argo controller using the argocd CLI to recognize the change and synchronize this new Gateway config to our kind cluster and establish an Envoy proxy. This is equivalent to the SYNC operation we performed in the UI earlier.

If you are not logged into Argo from the command line, you’ll first need to authenticate yourself:

Provide the credentials as with the UI earlier (username admin, password solo.io), and argocd should establish a session for you.

Now issue this command to kick off the sync and establish an Envoy proxy instance to handle external requests:

Confirm that Gloo Gateway has spun up an Envoy proxy instance in response to the creation of this Gateway object by deploying gloo-proxy-http:

Expect a response like this:

Establish External Access to Proxy

You can skip this step if you are running on a “proper” Kubernetes cluster that’s provisioned on your internal network or in a public cloud like AWS or GCP. In this case, we’ll be assuming that you have nothing more than your local workstation running Docker.

Because we are running Gloo Gateway inside a Docker-hosted cluster that’s not linked to our host network, the network endpoints of the Envoy data plane aren’t exposed to our development workstation by default. We will use a simple port-forward to expose the proxy’s HTTP port for us to use. (Note that gloo-proxy-http is Gloo’s deployment of the Envoy data plane.)

This returns:

With this port-forward in place, we’ll be able to access the routes we are about to establish using port 8080 of our workstation.

Configure Simple Routing with an HTTPRoute

Let’s begin our routing configuration with the simplest possible route to expose the /get operation on httpbin. This endpoint simply reflects back in its response the headers and any other arguments passed into the service with an HTTP GET request. You can sample the public version of this service here.

HTTPRoute is one of the new Kubernetes CRDs introduced by the Gateway API, as documented here. We’ll start by introducing a simple HTTPRoute for our service.

This example attaches to the Gateway object that we created in an earlier step. See the gloo-system/http reference in the parentRefs stanza. The Gateway object simply represents a host:port listener that the proxy will expose to accept ingress traffic.

Our route watches for HTTP requests directed at the host api.example.com with the request path /get and then forwards the request to the httpbin service on port 8000.
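Put together, a route with that behavior might look like the sketch below. The route name httpbin, the host, the path, the backend service, and the port come from the description above. Placing the route in an httpbin namespace alongside the service is an assumption.

```yaml
# Sketch of an HTTPRoute exposing only GET-style access to /get on httpbin.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: httpbin
  namespace: httpbin                   # assumed: same namespace as the httpbin service
spec:
  parentRefs:
    - name: http                       # the Gateway created earlier
      namespace: gloo-system
  hostnames:
    - api.example.com
  rules:
    - matches:
        - path:
            type: Exact                # only the literal path /get matches
            value: /get
      backendRefs:
        - name: httpbin
          port: 8000
```

Because the backendRef omits a namespace, it resolves to a service in the route's own namespace, which avoids the need for a ReferenceGrant.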

Let’s establish this route by committing this config to our Argo repo and then activating the Argo controller:

If you REFRESH the Argo httpbin-app display, you can see that the new HTTPRoute httpbin has been detected in the repo. (Isn’t it nice that all Gloo Gateway abstractions like HTTPRoute are expressed as Kubernetes Custom Resources, so that they are all first-class citizens in our Argo UI?) But our HTTPRoute is flagged as being OutOfSync. When you initiate a SYNC from this UI (or the command line), the app and the HTTPRoute will turn green as they are applied to our Kubernetes cluster.

Test the Simple Route with Curl

Now that Argo has established our HTTPRoute, let’s use curl to test the route from outside our cluster. We’ll display the response with the -i option to additionally show the HTTP response code and headers.

This command should complete successfully:

Note that if we attempt to invoke another valid endpoint /delay on the httpbin service, it will fail with a 404 Not Found error. Why? Because our HTTPRoute policy is only exposing access to /get, one of the many endpoints available on the service. If we try to consume an alternative httpbin endpoint like /delay:

Then we’ll see:

Explore Complex Routing with Regex Patterns

Let’s assume that now we DO want to expose other httpbin endpoints like /delay. Our initial HTTPRoute is inadequate, because it is looking for an exact path match with /get.

We’ll modify it in a couple of ways. First, we’ll modify the matcher to look for path prefix matches instead of an exact match. Second, we’ll add a new request filter to rewrite the matched /api/httpbin/ prefix with just a / prefix, which will give us the flexibility to access any endpoint available on the httpbin service. So a path like /api/httpbin/delay/1 will be sent to httpbin with the path /delay/1.

Here are the modifications we’ll apply to our HTTPRoute:
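As a minimal sketch, the rules stanza of the route could change as follows, with the rest of the HTTPRoute (metadata, parentRefs, hostnames) assumed to stay as it was.

```yaml
# Fragment: replaces spec.rules in the existing httpbin HTTPRoute.
rules:
  - matches:
      - path:
          type: PathPrefix             # was an Exact match on /get
          value: /api/httpbin/
    filters:
      - type: URLRewrite
        urlRewrite:
          path:
            type: ReplacePrefixMatch
            replacePrefixMatch: /      # /api/httpbin/delay/1 becomes /delay/1
    backendRefs:
      - name: httpbin
        port: 8000
```

ReplacePrefixMatch only rewrites the portion of the path that the PathPrefix matcher consumed, so everything after /api/httpbin/ reaches httpbin unchanged.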

Let’s use Argo to apply the modified HTTPRoute and test. The script below removes the original HTTPRoute YAML from our live configuration directory and replaces it with the one described above. It then commits and pushes those changes to GitHub. Finally, it uses the argocd CLI to force a sync on the artifacts of httpbin-app.

Test Routing with Regex Patterns

When we used only a single route with an exact match pattern, we could only exercise the httpbin /get endpoint. Let’s now use curl to confirm that both /get and /delay work as expected.

Perfect! It works just as expected! For extra credit, try out some of the other endpoints published via httpbin as well, like /status and /post.

Test Transformations with Upstream Bearer Tokens

What if we have a requirement to authenticate with one of the backend systems to which we route our requests? Let’s assume that this upstream system requires an API key for authorization, and that we don’t want to expose this directly to the consuming client. In other words, we’d like to configure a simple bearer token to be injected into the request at the proxy layer.

This type of use case is common for enterprises who are consuming AI services from a third-party provider like OpenAI or Anthropic. With Gloo Gateway, you can centrally secure and store the API keys for accessing your AI provider in a Kubernetes secret in the cluster. The gateway proxy uses these credentials to authenticate with the AI provider and consume AI services. To further secure access to the AI credentials, you can employ fine-grained RBAC controls. Learn more about managing authorization to an AI service with the Gloo AI Gateway in the product documentation.

But for this exercise, we will focus on a simple use case where we simply inject a static API key token directly from our HTTPRoute. We can express this in the Gateway API by adding a filter that applies a simple transformation to the incoming request. This will be applied along with the URLRewrite filter we created in the previous step. The new filters stanza in our HTTPRoute now looks like this:
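A sketch of such a filters stanza is shown here, using the Gateway API's standard RequestHeaderModifier filter. The token value is a hypothetical placeholder, and whether the original config uses add or set for the header is an assumption.

```yaml
# Fragment: the filters list of the httpbin HTTPRoute rule (under spec.rules[]).
filters:
  - type: URLRewrite                   # carried over from the previous step
    urlRewrite:
      path:
        type: ReplacePrefixMatch
        replacePrefixMatch: /
  - type: RequestHeaderModifier
    requestHeaderModifier:
      add:
        - name: Authorization
          value: Bearer my-api-key     # hypothetical static token; never commit real secrets
```

Keep in mind that a token written into an HTTPRoute lives in plain text in your git repo, which is acceptable for a demo but is exactly what the secret-based approach described above is meant to avoid.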

Let’s apply this policy change by updating our Argo repository and activating the controller using the argocd CLI:

Expect this response:

Now we’ll test using curl:

Note that our bearer token is now passed to the backend system in an Authorization header.

Gloo’s gateway products have a long history of providing sophisticated transformation policies, with capabilities like in-line Inja templates that can dynamically compute values from multiple sources in request and response transformations.

The core Gateway API does not offer this level of sophistication in its transformations, but there is good news. The community has learned from its experience with earlier, similar APIs like the Kubernetes Ingress API. The Ingress API did not offer extension points, which locked users strictly into the set of features envisioned by the creators of the standard. This ensured limited adoption of that API. So while many cloud-native API gateway vendors like Solo support the Ingress API, its active development has largely stopped.

The good news is that the new Gateway API offers core functionality as described in this blog post. But just as importantly, it delivers extensibility by allowing vendors to specify their own Kubernetes CRDs to specify policy. In the case of transformations, Gloo Gateway users can now leverage Solo’s long history of innovation to add important capabilities to the gateway, while staying within the boundaries of the new standard. For example, Solo’s extensive transformation library is now available in Gloo Gateway via Gateway API extensions like RouteOption and VirtualHostOption.

MIGRATE

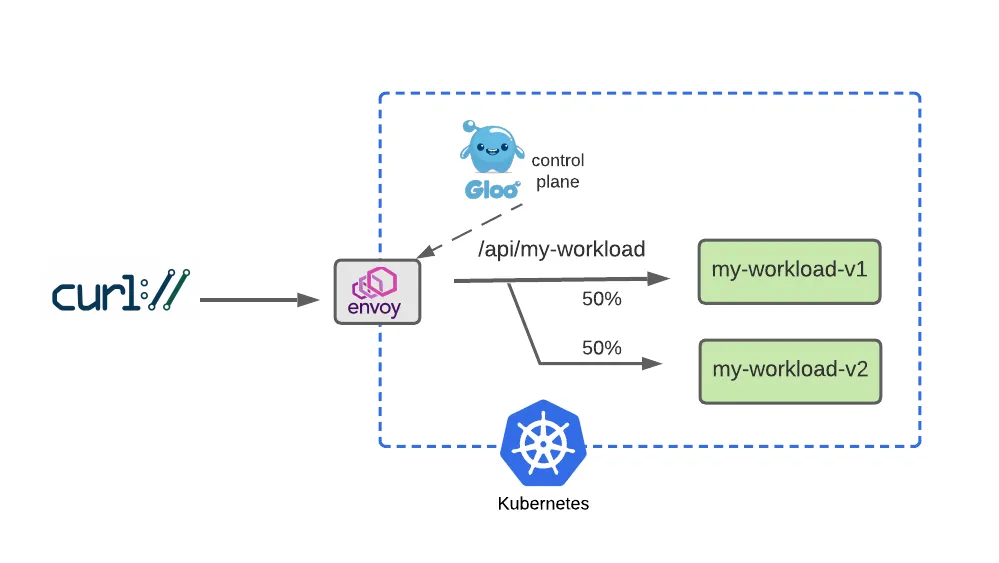

Delivering policy-driven migration of service workloads across multiple application versions is a growing practice among enterprises modernizing to cloud-native infrastructure. In this section, we’ll explore how a couple of common service migration techniques, dark launches with header-based routing and canary releases with percentage-based routing, are supported by the Gateway API standard.

Configure Two Workloads for Migration Routing

Let’s first establish two versions of a workload to facilitate our migration example. We’ll use the open-source Fake Service to enable this. Let’s establish a v1 of our my-workload service that’s configured to return a response string containing “v1”. We’ll create a corresponding my-workload-v2 service as well.

We’ll model these services as another Argo Application, so now we’ll have a total of three: one for Gloo Gateway itself, another for httpbin, and now a third for the my-workload service.

We’ll create the app in our Kubernetes cluster, and it will pull the initial service configuration from the repo we specified above.

Once the Argo app is created and synced, it will spin up both v1 and v2 flavors of the service in a new my-workload namespace. Confirm that the my-workload pods are running as expected using this command:

Expect a status showing two versions of my-workload running, similar to this:

You can also confirm from its web UI that there is a third app configured using Argo CD.

Test Simple V1 Routing

Before we dive into routing to multiple services, we’ll start by building a simple HTTPRoute that sends HTTP requests for host api.example.com whose paths begin with /api/my-workload to the v1 workload:
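Such a route might be sketched as follows. The host, path prefix, and parent Gateway come from the text. The route name, its placement in the my-workload namespace, and the service port 8080 are assumptions, so adjust them to match the services in your repo.

```yaml
# Sketch of an HTTPRoute sending all /api/my-workload traffic to v1.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: my-workload                    # hypothetical route name
  namespace: my-workload               # assumed: same namespace as the services
spec:
  parentRefs:
    - name: http
      namespace: gloo-system
  hostnames:
    - api.example.com
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /api/my-workload
      backendRefs:
        - name: my-workload-v1
          port: 8080                   # assumed service port
```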

Now commit this route and sync it to our cluster using the Argo controller:

Once the sync is complete, use curl to test that this routing configuration was properly applied:

See from the message body that v1 is the responding service, just as expected:

Simulate a v2 Dark Launch with Header-Based Routing

Dark Launch is a great cloud migration technique that releases new features to a select subset of users to gather feedback and experiment with improvements before potentially disrupting a larger user community.

We will simulate a dark launch in our example by installing the new cloud version of our service in our Kubernetes cluster, and then using declarative policy to route only requests containing a particular header to the new v2 instance. The vast majority of users will continue to use the original v1 of the service just as before.

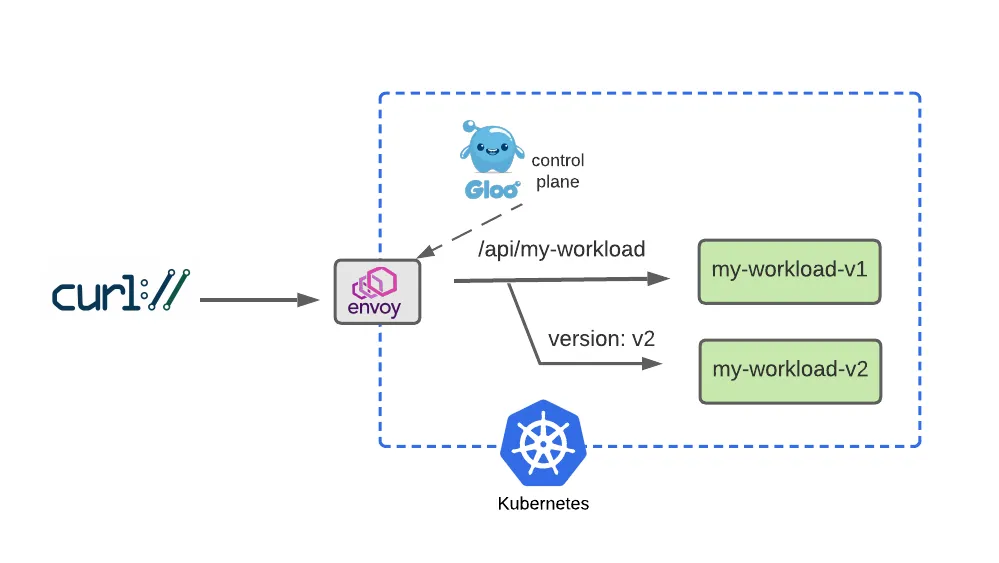

Configure two separate routes, one for v1 that the majority of service consumers will still use, and another route for v2 that will be accessed by specifying a request header with name version and value v2.
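One way to express these two routes is as two rules within a single HTTPRoute, sketched below as a replacement for the rules stanza. The header name version and value v2 come from the text. The service port 8080 is an assumption.

```yaml
# Fragment: replaces spec.rules in the my-workload HTTPRoute.
rules:
  # Dark launch: only requests carrying "version: v2" reach the new workload.
  - matches:
      - path:
          type: PathPrefix
          value: /api/my-workload
        headers:
          - type: Exact
            name: version
            value: v2
    backendRefs:
      - name: my-workload-v2
        port: 8080                     # assumed service port
  # Everyone else continues to reach v1.
  - matches:
      - path:
          type: PathPrefix
          value: /api/my-workload
    backendRefs:
      - name: my-workload-v1
        port: 8080                     # assumed service port
```

When both rules match the same path, the Gateway API gives precedence to the match with more header conditions, so requests with the version: v2 header land on the first rule regardless of ordering.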

Let’s commit the modified HTTPRoute to our Argo repo and activate its controller to apply the change:

We’ll first confirm by testing the original route, with no special headers supplied, and see that traffic still goes to v1:

But if we supply the version: v2 header, note that our gateway routes the request to v2 as expected:

Our dark launch routing rule works exactly as planned!

Expand V2 Testing with Percentage-Based Routing

After a successful dark launch, we may want a period where we use a canary strategy of gradually shifting user traffic from the old version to the new one. Let’s explore this with a routing policy that splits our traffic evenly, sending half our traffic to v1 and the other half to v2.

We will modify our HTTPRoute to accomplish this by removing the header-based routing rule that drove our dark launch. Then we will replace that with a 50-50 weight applied to each of the routes, as shown below:
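A sketch of the resulting rules stanza follows: a single rule with two weighted backends. As before, the service port 8080 is an assumption.

```yaml
# Fragment: replaces spec.rules in the my-workload HTTPRoute.
rules:
  - matches:
      - path:
          type: PathPrefix
          value: /api/my-workload
    backendRefs:
      - name: my-workload-v1
        port: 8080                     # assumed service port
        weight: 50
      - name: my-workload-v2
        port: 8080                     # assumed service port
        weight: 50
```

Weights are relative rather than percentages, so 50/50 and 1/1 describe the same split. Shifting to something like 90/10 and then 0/100 over successive commits is how the same stanza would drive a gradual rollout.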

Apply this 50-50 routing policy with Argo as we did before:

Now we’ll test this with a script that exercises this route 100 times. We expect to see roughly half go to v1 and the others to v2.

This result may vary somewhat but should be close to 50. Experiment with larger sample sizes to yield results that converge on 50%.

If you’d like to understand how Gloo Gateway and Argo CD can further automate the migration process, explore Gloo’s integration with Argo Rollouts with this blog and product documentation.

DEBUG

Let’s be honest with ourselves: Debugging bad software configuration is a pain. Gloo engineers have done their best to ease the process as much as possible, with documentation like this, for example. However, as we have all experienced, it can be a challenge with any complex system. In this slice of our 30-minute tutorial, we’ll explore how to use the glooctl utility to assist in some simple debugging tasks for a common problem.

Solve a Problem with Glooctl CLI

A common source of Gloo configuration errors is mistyping an upstream reference, perhaps when copy/pasting it from another source but “missing a spot” when changing the name of the backend service target. In this example, we’ll simulate making an error like that, and then demonstrate how glooctl can be used to detect it.

First, let’s apply a change to simulate the mistyping of an upstream config so that it is targeting a non-existent my-bad-workload-v2 backend service, rather than the correct my-workload-v2.

You should see:

When we test this out, note that the 50-50 traffic split is still in place. This means that about half of the requests will be routed to my-workload-v1 and succeed, while the others will attempt to use the non-existent my-bad-workload-v2 and fail like this:

So we’ll deploy one of the first weapons from the Gloo debugging arsenal, the glooctl check utility. It verifies a number of Gloo resources, confirming that they are configured correctly and are interconnected with other resources correctly. For example, in this case, glooctl will detect the error in the mis-connection between the HTTPRoute and its backend target:

The detected errors clearly identify that the HTTPRoute contains a reference to an invalid service named my-bad-workload-v2 in the namespace my-workload.

With these diagnostics, we can readily locate the bad destination on our route and correct it. Note that we introduced the error by using kubectl to make changes directly to the cluster. Since we have Argo configured for manual sync on this workload, the controller did not immediately override our changes. Instead, it interprets the current state of our cluster as having suffered “drift” from the specified configuration in GitHub. So we’ll invoke argocd to sync the state of the cluster with our repo and fix the drift by reapplying the previous configuration. Then we’ll confirm that the glooctl diagnostics are again clean.

Re-run glooctl check and observe that there are no problems. Our curl commands to the my-workload services will also work again as expected:

OBSERVE Your API Gateway in Action

Finally, let’s tackle an exercise where we’ll learn about some simple observability tools that ship with open-source Gloo Gateway.

Explore Envoy Metrics

Envoy publishes a host of metrics that may be useful for observing system behavior. In our very modest kind cluster for this exercise, you can count over 3,000 individual metrics! You can learn more about them in the Envoy documentation here.

For this exercise, let’s take a quick look at a couple of the useful metrics that Envoy produces for every one of our backend targets.

First, we’ll port-forward the Envoy administrative port 19000 to our local workstation:

This shows:

For this exercise, let’s view two of the relevant metrics from the first part of this exercise: one that counts the number of successful (HTTP 2xx) requests processed by our httpbin backend (or cluster, in Envoy terminology), and another that counts the number of requests returning server errors (HTTP 5xx) from that same backend:

Which gives us:

As you can see, on my Envoy instance I’ve processed twelve good requests and two bad ones. (Note that if your Envoy has not processed any 5xx requests for httpbin yet, then there will be no entry present. But after the next step, that metrics counter should be established with a value of 1.)

If we apply a curl request that forces a 500 failure from the httpbin backend, using the /status/500 endpoint, I’d expect the number of 2xx requests to remain the same, and the number of 5xx requests to increment by one:

Now re-run the command to harvest the metrics from Envoy:

And we see the 5xx metric for the httpbin cluster updated just as we expected!

If you’d like to have more tooling and enhanced visibility around system observability, we recommend taking a look at an Enterprise subscription to Gloo Gateway. You can sign up for a free trial here.

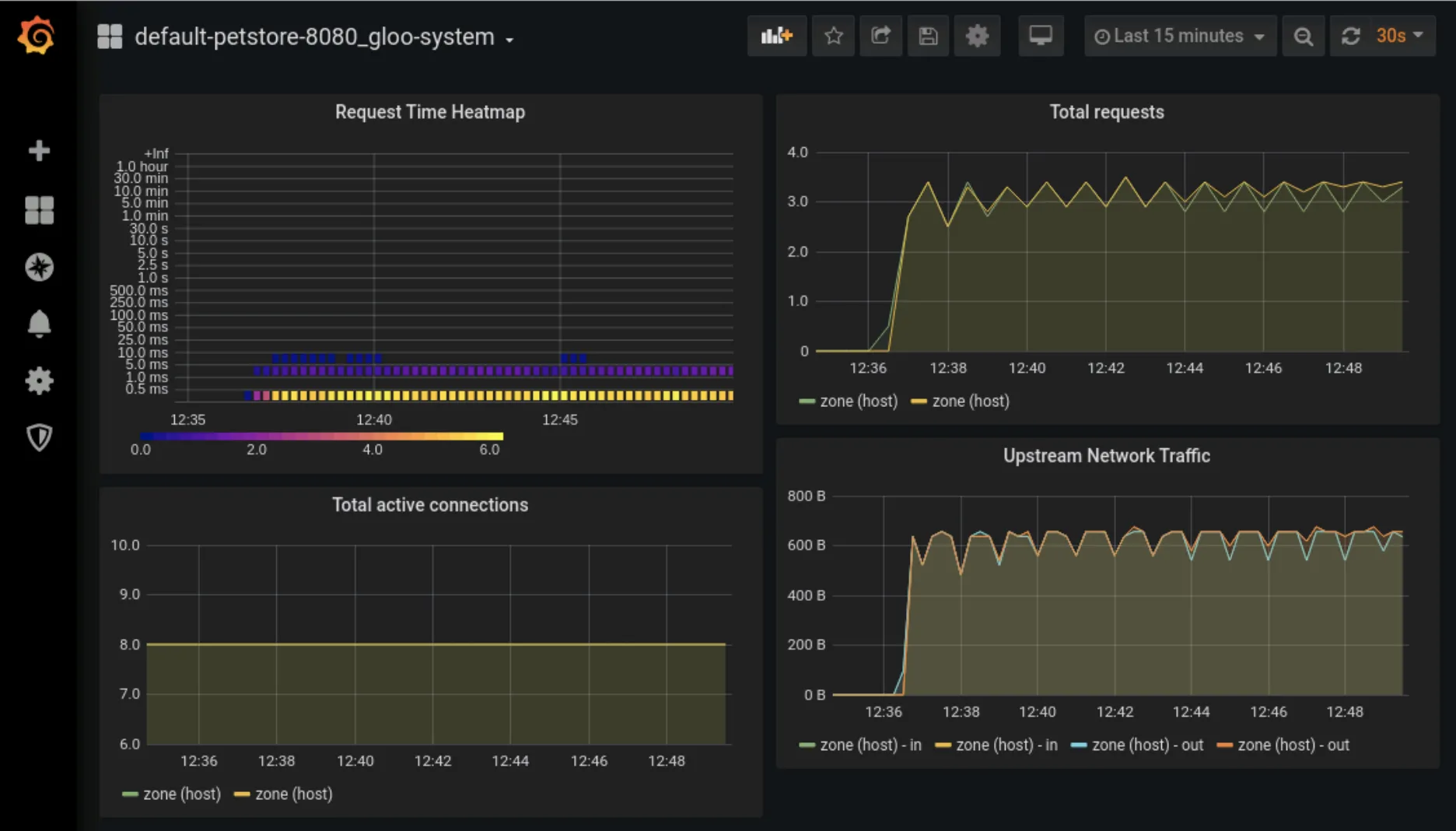

Gloo Gateway is easy to integrate with open tools like Prometheus and Grafana, along with emerging standards like OpenTelemetry. These allow you to replace curl and grep in our simple example with dashboards like the one below. Learn more about Gloo Gateway’s OpenTelemetry support in the product documentation. You can also integrate with enterprise observability platforms like New Relic and Datadog. (And with New Relic, you get the added benefit of using a product that has already adopted Solo’s gateway technology.)

Cleanup

If you’d like to clean up the work you’ve done, simply delete the kind cluster where you’ve been working.

Learn More

In this blog post, we explored how you can get started with the open-source edition of Gloo Gateway and the Argo CD GitOps platform. We walked through the process of establishing an Argo configuration, then installing Applications to represent the Gloo Gateway product itself and manage two user services. We exposed the user services through an Envoy proxy instance and used declarative policies to manage simple routing, transformations, and migration between versions of a user service. We also looked briefly at debugging and observability tools. All of the configuration used in this guide is available on GitHub.

A Gloo Gateway Enterprise subscription offers even more value to users who require:

- Integration with identity management platforms like Okta and Google via the OIDC standard;

- Configuration-driven rate limiting;

- Securing your application network with WAF and ModSecurity rules, or Open Policy Agent; and

- An API Portal for publishing APIs using industry-standard Backstage.

For more information, check out the following resources.

- Explore the documentation for Gloo Gateway.

- Request a live demo or trial for Gloo Edge Enterprise.

- See video content on the solo.io YouTube channel.

- Questions? Join the Solo.io Slack community.
