The future of API Management is Omni

Traditional API management is a cloud anti-pattern. It’s time to evolve API connectivity to work for the era of Kubernetes, platform engineering, and GenAI.

Cloud-native foundation

Cloud-native API connectivity delivers cloud scale, performance, reliability, and flexibility by being built on the right foundation. Envoy Proxy is the world's leading cloud-native network proxy, built for the cloud by companies born in the cloud.

Every direction, any environment

API Connectivity is required for inbound, service-to-service, and outbound API traffic. An Omni gateway supports comprehensive API management in every direction with a federated control plane and a distributed data plane that runs across cloud providers, VMs, and serverless architectures.

Zero trust for APIs

Legacy API management solutions focus on securing public APIs while ignoring internal API traffic and third-party API consumption. An Omni gateway approach establishes a zero trust security posture, providing automatic authentication, authorization, encryption, and audit on all API traffic regardless of direction.

Unbundled API management

Monolithic API management suites lock you into vendor stacks that limit choice and stifle innovation. An Omni approach builds on open source and open standards to place you at the center of a vibrant ecosystem of best-of-breed solutions for each stage of the API lifecycle.

Solo Enterprise for kgateway is the world’s leading Omni Gateway

Solo Enterprise for kgateway is an enterprise-grade cloud-native API connectivity solution that unifies API management across external, internal, and third-party APIs.

Built on the market-leading Envoy Proxy and kgateway, it brings API management into the cloud-native era, offering self-service to API development teams, backed by the automated guardrails that platform teams require to secure, scale, and monitor APIs in production.

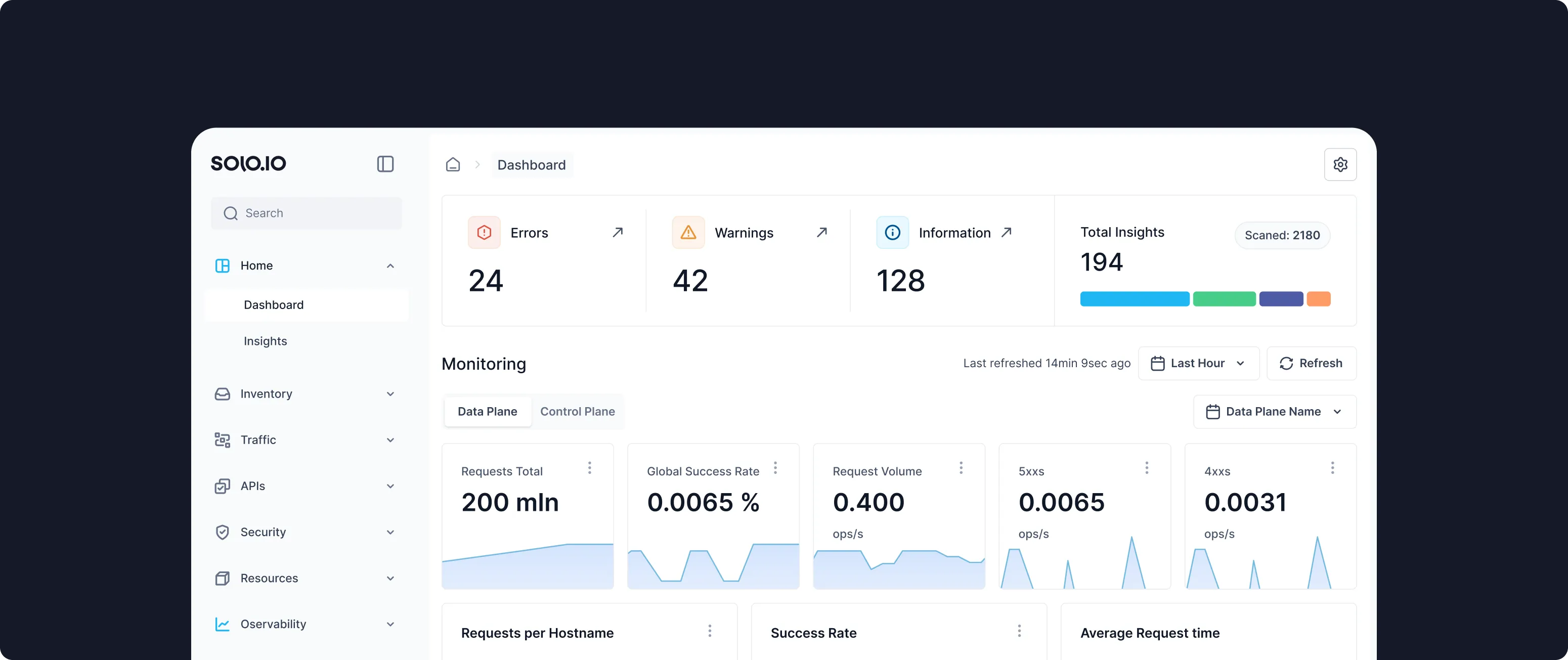

Analytics & monetization

Advanced API metrics and analytics allow you to monitor API health and performance, measure the business impact of API products, and monetize API consumption.

Developer portal

Modernize your public API presence with a React-based developer portal that is ready to deploy out of the box. Support internal teams with comprehensive Backstage integration for internal developer portals.

Multi-cluster

A federated control plane allows centralized deployment, management, and monitoring across on-premises and cloud environments. Delegated routing, failover, and discovery provide best-in-class flexibility and scale for distributed deployment architectures.

Zero trust

Legacy API management solutions focus on securing public APIs while ignoring internal API traffic and third-party API consumption. An Omni gateway approach establishes a zero trust security posture, providing automatic authentication, authorization, encryption, and audit on all API traffic regardless of direction.

Multi-protocol

Modern API connectivity requires support for a wide range of Layer 4 and Layer 7 protocols. Solo Enterprise for kgateway's robust support for HTTP, TCP, and event-driven protocols provides a unified solution across all API interfaces.

LLM integration

Agentgateway supports advanced features for integrating with LLM API providers, including credential management, prompt guardrails, RAG, semantic caching, and much more. See agentgateway for more details.

Cloud connectivity done right