Exposing your Kubernetes Services to web traffic involves many moving parts, especially on AWS. To allow ingress to your applications, you have to configure Network Load Balancers (NLBs), their associated controllers on the EKS cluster, and, most importantly, DNS.

Luckily, there’s a great community project called external-dns, which synchronizes exposed Kubernetes Services and Ingresses with public DNS providers, including Route53. It also supports the Kubernetes Gateway API, which Gloo Gateway supports starting in version 1.17. This lets us dynamically create, update, and delete Route53 DNS records whenever we create an HTTPRoute object, keeping DNS records in sync with our application’s FQDN.

The following tutorial walks through the setup of external-dns with Route53. It also demonstrates how easy it is to integrate Gloo Gateway with external-dns, giving us a simple mechanism to synchronize DNS hostnames with our delegated HTTPRoutes without having to maintain them in two separate places.

Prerequisites

- An AWS account

- AWS CLI

- Gloo Gateway v1.17 installed on an EKS cluster. The quickest path is to follow these instructions

Method

We need to create an IAM Policy, a k8s Service Account, and an IAM Role, and associate them with one another so that the external-dns pod can add or remove entries in AWS Route53 Hosted Zones.

Set up external-dns and Route53

- Create IAM Policy. This IAM policy allows the external-dns pod to add, remove, and update DNS entries (Record Sets in a Hosted Zone) in the AWS Route53 service.

a. Via the AWS Web UI, go to Services -> IAM -> Policies -> Create Policy.

b. Click on JSON tab and copy/paste the below JSON snippet.

c. Click on Visual Editor tab to validate.

d. Click on Review Policy.

e. Name: AllowExternalDNSUpdates.

f. Description: Allow access to Route53 Resources for ExternalDNS.

g. Click on Create Policy.
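For reference, a policy for step b might look like the following sketch. It is modeled on the minimal permissions external-dns needs for Route53 (changing record sets, plus listing zones and records). The file name `external-dns-policy.json` is an arbitrary placeholder, and you may prefer to narrow `hostedzone/*` to your own hosted zone ID.

```shell
# Write a minimal Route53 policy for external-dns to a local file.
# Assumption: the file name is arbitrary; paste its contents into the JSON tab.
cat > external-dns-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["route53:ChangeResourceRecordSets"],
      "Resource": ["arn:aws:route53:::hostedzone/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["route53:ListHostedZones", "route53:ListResourceRecordSets"],
      "Resource": ["*"]
    }
  ]
}
EOF
```

If you would rather use the CLI than the console, `aws iam create-policy --policy-name AllowExternalDNSUpdates --policy-document file://external-dns-policy.json` should create an equivalent policy.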

- Create IAM Role, k8s Service Account, and Associate IAM Policy. In this step, we create a k8s Service Account named external-dns and an AWS IAM Role, and associate them by annotating the Service Account with the role ARN. We also attach the AWS IAM Policy AllowExternalDNSUpdates to the newly created AWS IAM Role.

a. Create IAM Role, k8s Service Account, and Associate IAM Policy. Don’t forget to replace the placeholders.
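One way to do all three things in a single step is `eksctl create iamserviceaccount`, which is consistent with the CloudFormation stack and the `eksctl-...-iamserviceaccount-...` role name seen in the verification steps. The sketch below wraps that call in a helper. The function name is hypothetical, and the `default` namespace is an assumption inferred from the role name, so adjust it if your setup differs.

```shell
# Hypothetical helper: creates the IAM role, the external-dns Service Account,
# and the policy attachment with one eksctl call.
# Arguments: <cluster-name> <aws-account-id>
create_external_dns_sa() {
  cluster_name="$1"
  account_id="$2"
  eksctl create iamserviceaccount \
    --name external-dns \
    --namespace default \
    --cluster "$cluster_name" \
    --attach-policy-arn "arn:aws:iam::${account_id}:policy/AllowExternalDNSUpdates" \
    --approve \
    --override-existing-serviceaccounts
}
```

For example, `create_external_dns_sa eksdemo1 111122223333`, where both the cluster name and the account ID are placeholders to replace with your own values.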

- Verify CloudFormation (CFN) Stack

a. Go to Services -> CloudFormation.

b. Verify the latest CFN Stack created.

c. Click on Resources tab.

d. Click on link in Physical ID field, which will take us to IAM Role directly.

- Verify IAM Role & IAM Policy

a. With the above step in CloudFormation, we will land in an IAM Role created for external-dns.

b. Verify in Permissions tab we have a policy named AllowExternalDNSUpdates.

c. Make a note of the Role ARN; we need it to update the external-dns k8s manifest. For example:

arn:aws:iam::180789647333:role/eksctl-eksdemo1-addon-iamserviceaccount-defa-Role1-1O3H7ZLUED5H4

- Update External DNS Kubernetes manifest

a. Update the domain-filter placeholder [line-69] in the following template with your Route53 DNS name, e.g., solo-wlm.net
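As an illustration of what such a manifest can contain, the sketch below writes a trimmed-down external-dns deployment to `external-dns.yaml`. It is not the original template, so line numbers will not match. Assumptions: the `default` namespace, the image tag `v0.14.0`, the `txt-owner-id` value, and the dummy role ARN are all placeholders, and the `gateway-httproute` source is enabled so that external-dns watches Gateway API HTTPRoutes.

```shell
# Assumption: replace both values; the role ARN shown here is a dummy.
DOMAIN_FILTER="${DOMAIN_FILTER:-example.com}"
ROLE_ARN="${ROLE_ARN:-arn:aws:iam::111122223333:role/REPLACE_ME}"

cat > external-dns.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns
  namespace: default
  annotations:
    eks.amazonaws.com/role-arn: ${ROLE_ARN}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: external-dns
rules:
  - apiGroups: [""]
    resources: ["services", "endpoints", "pods", "nodes", "namespaces"]
    verbs: ["get", "watch", "list"]
  - apiGroups: ["networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["get", "watch", "list"]
  - apiGroups: ["gateway.networking.k8s.io"]
    resources: ["gateways", "httproutes"]
    verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: external-dns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: external-dns
subjects:
  - kind: ServiceAccount
    name: external-dns
    namespace: default
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: external-dns
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: external-dns
  template:
    metadata:
      labels:
        app: external-dns
    spec:
      serviceAccountName: external-dns
      securityContext:
        fsGroup: 65534   # lets the container read the projected AWS token file
      containers:
        - name: external-dns
          image: registry.k8s.io/external-dns/external-dns:v0.14.0
          args:
            - --source=service
            - --source=ingress
            - --source=gateway-httproute   # watch Gateway API HTTPRoutes
            - --domain-filter=${DOMAIN_FILTER}
            - --provider=aws
            - --policy=sync                # upsert-only would never delete records
            - --aws-zone-type=public
            - --registry=txt
            - --txt-owner-id=external-dns
EOF
```

After reviewing the generated file, `kubectl apply -f external-dns.yaml` deploys it. The `--policy=sync` setting is a deliberate choice here: it allows external-dns to delete records as well as create them, whereas `upsert-only` leaves stale records in place.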

Install Gloo Gateway on EKS

- Install the AWS Load Balancer Controller on your EKS cluster using these instructions.

- Using your newly installed EKS cluster, install Gloo Gateway using this Getting Started guide up to step 6.

- From step 7 of the Getting Started guide onwards, EKS needs specific load balancer annotations for NLBs to be assigned correctly. We’ll put them into a GatewayParameters custom resource (CR), then apply a Gateway resource that refers back to the GatewayParameters. You can use the following command:
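A sketch of what these two resources can look like is shown below, written to `gateway.yaml`. The annotation keys are the standard AWS Load Balancer Controller ones. The resource names (`custom-gw-params`, `http`), the `gloo-system` namespace, the listener port, and the exact GatewayParameters field layout reflect our understanding of Gloo Gateway 1.17 and should be checked against the Gloo Gateway documentation for your version.

```shell
# Assumption: names, namespace, and listener port are illustrative.
cat > gateway.yaml <<'EOF'
apiVersion: gateway.gloo.solo.io/v1alpha1
kind: GatewayParameters
metadata:
  name: custom-gw-params
  namespace: gloo-system
spec:
  kube:
    service:
      extraAnnotations:
        service.beta.kubernetes.io/aws-load-balancer-type: "external"
        service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing"
        service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: "instance"
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: http
  namespace: gloo-system
  annotations:
    # Points the Gateway at the GatewayParameters defined above.
    gateway.gloo.solo.io/gateway-parameters-name: "custom-gw-params"
spec:
  gatewayClassName: gloo-gateway
  listeners:
    - name: http
      protocol: HTTP
      port: 8080
      allowedRoutes:
        namespaces:
          from: All
EOF
```

Apply it with `kubectl apply -f gateway.yaml`, then check `kubectl get svc -n gloo-system` to confirm that the proxy Service received an NLB hostname.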

- Deploy the sample httpbin app:
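If you do not have the sample manifest to hand, a minimal stand-in is enough for this tutorial. The sketch below is an assumption rather than the official sample: it uses the public `kennethreitz/httpbin` image (which listens on port 80) and exposes it through a Service on port 8000 in an `httpbin` namespace.

```shell
# Assumption: a hand-written stand-in for the sample app, not the official manifest.
cat > httpbin.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: httpbin
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  namespace: httpbin
  labels:
    app: httpbin
spec:
  selector:
    app: httpbin
  ports:
    - name: http
      port: 8000
      targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  namespace: httpbin
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
  template:
    metadata:
      labels:
        app: httpbin
    spec:
      containers:
        - name: httpbin
          image: docker.io/kennethreitz/httpbin
          ports:
            - containerPort: 80
EOF
```

Deploy it with `kubectl apply -f httpbin.yaml` and wait for the pod to become ready with `kubectl -n httpbin rollout status deployment/httpbin`.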

- Expose the httpbin app using Gloo Gateway. Update the hostnames field to reflect your Route53 domain name.
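A minimal route might look like the sketch below, written to `httpbin-route.yaml`. It assumes a Gateway named `http` in the `gloo-system` namespace and an `httpbin` Service on port 8000 in the `httpbin` namespace; the `httpbin.example.com` hostname is a placeholder for a name inside your Route53 zone. The `hostnames` entry is what external-dns reads to create the DNS record.

```shell
# Assumption: replace the hostname with one inside your Route53 hosted zone.
ROUTE_HOSTNAME="${ROUTE_HOSTNAME:-httpbin.example.com}"

cat > httpbin-route.yaml <<EOF
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: httpbin
  namespace: httpbin
spec:
  parentRefs:
    - name: http
      namespace: gloo-system
  hostnames:
    - "${ROUTE_HOSTNAME}"
  rules:
    - backendRefs:
        - name: httpbin
          port: 8000
EOF
```

Apply it with `kubectl apply -f httpbin-route.yaml -n httpbin`.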

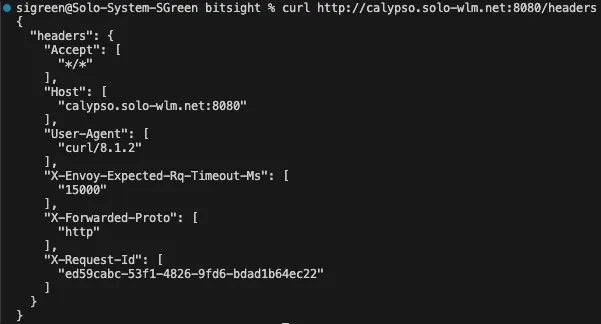

- If the update is successful, you should be able to call your endpoint (with curl) using your dynamically created DNS name:
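New DNS records can take a little while to propagate, so a single curl call may fail at first. The hypothetical helper below simply retries curl until the endpoint answers or the attempts run out; the default retry count and delay are arbitrary.

```shell
# Hypothetical helper: poll a URL until it responds successfully.
# Arguments: <url> [attempts, default 30] [delay in seconds, default 10]
wait_for_endpoint() {
  url="$1"
  attempts="${2:-30}"
  delay="${3:-10}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl fail on HTTP errors; --max-time bounds each attempt.
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "OK: $url answered on attempt $i"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Gave up on $url after $attempts attempts" >&2
  return 1
}
```

For example, `wait_for_endpoint http://httpbin.example.com:8080/headers`, assuming your Gateway listens on port 8080 and `httpbin.example.com` stands in for your own hostname.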

- Also check the external-dns logs to ensure the updates were successful:
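A small helper for pulling the most recent log lines is sketched below. It assumes external-dns runs as a Deployment named `external-dns` in the `default` namespace; the function name is hypothetical.

```shell
# Hypothetical helper: show the last N log lines of external-dns (default 50).
external_dns_logs() {
  kubectl logs deployment/external-dns \
    --namespace default \
    --tail "${1:-50}"
}
```

Calling `external_dns_logs 100` prints the last 100 lines, which should include entries about the records external-dns created or updated.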

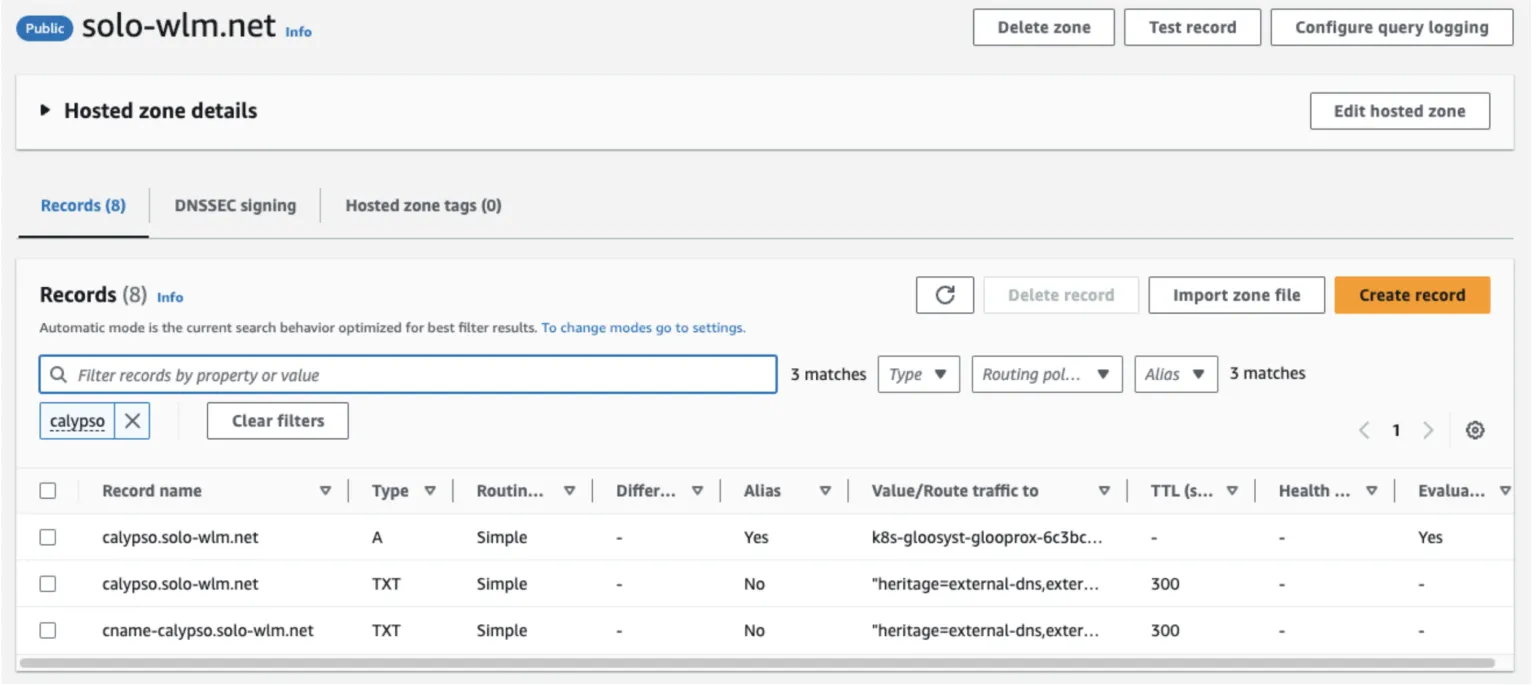

- Via the AWS Web Console, you can see the DNS records created in Route53:
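The same check can be done from the AWS CLI. The sketch below looks up the hosted zone for a domain and lists its A and TXT records (external-dns keeps ownership information in TXT records when the `txt` registry is used). The function name is hypothetical, and the zone lookup simply takes the first match returned, so double-check the result if you have several similarly named zones.

```shell
# Hypothetical helper: list A and TXT records in the hosted zone for a domain.
# Argument: <domain>, e.g. example.com
list_zone_records() {
  domain="$1"
  zone_id=$(aws route53 list-hosted-zones-by-name \
    --dns-name "$domain" \
    --query 'HostedZones[0].Id' \
    --output text)
  # The API returns IDs like /hostedzone/Z123...; keep only the last segment.
  zone_id="${zone_id##*/}"
  aws route53 list-resource-record-sets \
    --hosted-zone-id "$zone_id" \
    --query "ResourceRecordSets[?Type=='A' || Type=='TXT'].[Name, Type]" \
    --output table
}
```

Running `list_zone_records example.com` before and after creating or deleting a route makes it easy to see which records external-dns manages.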

- **BONUS TASK** Try deleting your newly created httpbin route (kubectl delete -f httpbin-route.yaml -n httpbin) to see what happens in the Route53 console. Is the behavior what you expected?

Explore More Ways to Get Started

This tutorial demonstrates how easily a DNS provider like Route53 can be integrated with Gloo Gateway through external-dns, without the headache of keeping DNS records in sync by hand. For other examples of using external-dns with Gloo Gateway, including an integration with cert-manager, take a look at the External DNS & Cert Manager Getting Started Guide.
